MySQL Group Replication: Difference between revisions

mNo edit summary |

|||

| (55 intermediate revisions by the same user not shown) | |||

| Line 28: | Line 28: | ||

LXC Containers | LXC Containers | ||

- db1 (MySQL8.0) | - db1 (MySQL8.0) (10.0.3.202) | ||

- db2 (MySQL8.0) | - db2 (MySQL8.0) (10.0.3.246) | ||

- db3 (MySQL8.0) | - db3 (MySQL8.0) (10.0.3.175) | ||

- db4 (MySQL8.0) | - db4 (MySQL8.0) (10.0.3.26) | ||

- web1 ( | - web1 (Apps) (10.0.3.159) | ||

</pre> | </pre> | ||

| Line 38: | Line 38: | ||

=Introduction= | =Introduction= | ||

This article explains how to create a MySQL8.0 HA cluster using group replication and MySQL router. It is important to note that I am using an Ubuntu 20.04 LTS server edition as the LXC host OS and have created 4 Ubuntu 20.04 containers in it and installed MySQL 8.0 from the repositories. The containers have private IPs and they are not reachable on public network. | This article explains how to create a MySQL8.0 HA cluster using group replication and MySQL router. It is important to note that I am using an '''Ubuntu 20.04 LTS''' server edition as the LXC host OS and have created 4 Ubuntu 20.04 containers in it and installed MySQL 8.0 from the repositories. The containers have private IPs and they are not reachable on public network. An nginx reverse proxy is setup on the LXC host server so that applications, setup on the containers, are accessible on port 443 or port 80. 'How to setup Nginx as a reverse Proxy' can be found [[NginX as Reverse Proxy | here]]. | ||

= | =Environment preparation= | ||

Container details are mentioned below. Host entries have been made into respective servers so that the servers can reach every other server using a hostname instead of an IP. | Container details are mentioned below. Host entries have been made into respective servers so that the servers can reach every other server using a hostname instead of an IP. | ||

{| class="wikitable sortable" | {| class="wikitable sortable" | ||

|- | |- | ||

! # !! Container !! Hostname !! IP Address !! Software installed | ! # !! Container/Host !! Hostname !! IP Address !! Software installed | ||

|- | |- | ||

| 1 || | | 1 || LXC Host || infrabase1 || 10.1.65.9 || lxc, nginx, mysql-shell | ||

|- | |- | ||

| 2 || Container | | 2 || Container 1 || db1 || 10.0.3.202 || mysql server 8.0 | ||

|- | |- | ||

| 3 || Container | | 3 || Container 2 || db2 || 10.0.3.246 || mysql server 8.0 | ||

|- | |- | ||

| 4 || Container | | 4 || Container 3 || db3 || 10.0.3.175 || mysql server 8.0 | ||

|- | |- | ||

| 5 || Container 5 || web1 || 10.0.3.159 || mysql-client, apache2, php7.4, wordpress, mysqlrouter | | 5 || Container 4 || db4 || 10.0.3.26 || mysql server 8.0 | ||

|- | |||

| 6 || Container 5 || web1 || 10.0.3.159 || mysql-client, apache2, php7.4, wordpress, mysqlrouter | |||

|} | |} | ||

Wordpress will be setup on web1 container and mysqlrouter will also be placed on the same application server. Wordpress will connect to the mysqlrouter like a local mysql db instance. Mysqlrouter will route it to the necessary mysql server in the cluster. | Wordpress will be setup on web1 container and mysqlrouter will also be placed on the same application server. Wordpress will connect to the mysqlrouter like a local mysql db instance. Mysqlrouter will route it to the necessary mysql server in the cluster. | ||

The lxc host server (10.1.65.9) will be used to connect to the db servers using mysql-shell. Group replication implementation will be done using the mysql-shell only. No files will be manually edited. Installing mysql-shell will be explained in the below sections. | |||

=Preparing MySQL Servers= | =Preparing MySQL Servers= | ||

The below steps have to be done on all | The below steps have to be done on all three servers without exception | ||

==Install mysql server 8.0== | |||

*Install from the default repositories | |||

<pre> | <pre> | ||

root@db1:/# apt install mysql-server nano | root@db1:/# apt install mysql-server nano | ||

| Line 72: | Line 77: | ||

If you are doing a POC, answer 'N' for the first question. It asks if password complexity should be enabled. You can keep short passwords so that it is simpler to try out in a POC. For a production environment you should enable password complexity. Answer 'Y' for all further questions. This will disable remote 'root login' on the server and also remove anonymous users and test databases from the MySql server. | If you are doing a POC, answer 'N' for the first question. It asks if password complexity should be enabled. You can keep short passwords so that it is simpler to try out in a POC. For a production environment you should enable password complexity. Answer 'Y' for all further questions. This will disable remote 'root login' on the server and also remove anonymous users and test databases from the MySql server. | ||

==Create user for cluster management== | |||

Login to sql server and create a user and grant all permissions. Since this is a POC, you can be a bit liberal with the permissions, otherwise checkout the documentation on permissions for group replication user on MySQL website. | Login to sql server and create a user and grant all permissions. Since this is a POC, you can be a bit liberal with the permissions, otherwise checkout the documentation on permissions for group replication user on MySQL website. | ||

<pre> | <pre> | ||

| Line 81: | Line 87: | ||

mysql> EXIT; | mysql> EXIT; | ||

</pre> | </pre> | ||

==Allow remote database connection== | |||

<pre> | <pre> | ||

root@db1:/# nano /etc/mysql/mysql.conf.d/mysqld.cnf | root@db1:/# nano /etc/mysql/mysql.conf.d/mysqld.cnf | ||

| Line 87: | Line 93: | ||

Comment the bind address directive so that this database can be connected from other sources. | Comment the bind address directive so that this database can be connected from other sources. | ||

<pre> | <pre> | ||

. | . | ||

#bind-address = 127.0.0.1 | #bind-address = 127.0.0.1 | ||

. | . | ||

</pre> | </pre> | ||

Repeat the above steps in the other servers, exactly in the same manner. Do not change password of the user or the username. In POC keep it same otherwise they can be different. | |||

=Setting up MySQL Shell= | =Setting up MySQL Shell= | ||

= | We shall be setting up the mysql shell on the LXC host server (10.1.65.9) from where the lxc containers running the mysql servers are reachable. Group replication will be setup using the mysql-shell program. Group replication can also be setup without the mysql-shell, however several modifications are required in the configuration files. Mysql-shell automates the configuration and helps in managing the cluster as well. | ||

It is '''important''' to note that Ubuntu 20.04 does not have a mysql-shell binary in the default apt repository. However, it is available as a snap. If you install mysql-shell snap, then javascript is not enabled by default for the mysql-shell installed through snap. For setting up mysql cluster mysql-shell needs javascript. We therefore download the mysql-shell deb file from mysql website and manually install it on LXC host server. | |||

<pre> | |||

kedar@infrabase1:~$ wget https://dev.mysql.com/get/Downloads/MySQL-Shell/mysql-shell_8.0.20-1ubuntu20.04_amd64.deb | |||

kedar@infrabase1:~$ sudo dpkg -i mysql-shell_8.0.20-1ubuntu20.04_amd64.deb | |||

</pre> | |||

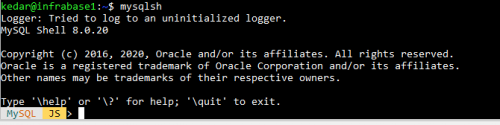

Once it is installed, you should be able to connect to the db servers using 'clustermgr' credentials. Use the following command | |||

<pre> | |||

kedar@infrabase1:~$ mysqlsh | |||

</pre> | |||

It should look like below | |||

[[File: Mysqlsh.png| 500 px | left]] <br /><br /><br /><br /><br /><br /> | |||

=Setting up the cluster= | |||

==Prepare servers to add in cluster== | |||

* Log into the first database server(db1) through the mysql shell from the lxc host server (10.1.65.9). | |||

<pre> | |||

kedar@infrabase1:~$ mysqlsh | |||

</pre> | |||

At the shell enter the following | |||

<pre> | |||

MySQL-js> dba.checkInstanceConfiguration('clustermgr@db1:3306') | |||

MySQL-js> dba.configureLocalInstance('clustermgr@db1:3306') | |||

By pressing ^D, you can exit the mysql-shell | |||

</pre> | |||

Ssh into the mysql server and restart the db1 server. Check the status of the server and ensure mysql server is running. | |||

<pre> | |||

root@db1:/# systemctl restart mysql | |||

root@db1:/# systemctl status mysql | |||

</pre> | |||

Repeat these steps for all of the remaining servers | |||

==Create the cluster== | |||

===First DB Server=== | |||

Connect to the first db server using the mysql shell from the lxc host server | |||

<pre> | |||

kedar@infrabase1:~$ mysqlsh | |||

MySQL-js> shell.connect('clustermgr@db1:3306') | |||

MySQL| db1:3306 ssl -js> cluster = dba.createCluster('myCluster'); | |||

MySQL| db1:3306 ssl -js> cluster.status() | |||

</pre> | |||

It will appear as below | |||

<pre> | |||

{ | |||

"clusterName": "myCluster", | |||

"defaultReplicaSet": { | |||

"name": "default", | |||

"primary": "db1:3306", | |||

"ssl": "REQUIRED", | |||

"status": "OK_NO_TOLERANCE", | |||

"statusText": "Cluster is NOT tolerant to any failures.", | |||

"topology": { | |||

"db1:3306": { | |||

"address": "db1:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

} | |||

}, | |||

"topologyMode": "Single-Primary" | |||

}, | |||

"groupInformationSourceMember": "db1:3306" | |||

} | |||

</pre> | |||

===Second DB Server=== | |||

Connect to the second db server using the mysql shell from the lxc host server | |||

<pre> | |||

MySQL|db1:3306 ssl|JS> cluster.addInstance('clustermgr@db2:3306'); | |||

MySQL| db1:3306 ssl -js> cluster.status() | |||

</pre> | |||

The cluster status will appear as below | |||

<pre> | |||

{ | |||

"clusterName": "myCluster", | |||

"defaultReplicaSet": { | |||

"name": "default", | |||

"primary": "db1:3306", | |||

"ssl": "REQUIRED", | |||

"status": "OK_NO_TOLERANCE", | |||

"statusText": "Cluster is NOT tolerant to any failures.", | |||

"topology": { | |||

"db1:3306": { | |||

"address": "db1:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

}, | |||

"db2:3306": { | |||

"address": "db2:3306", | |||

"mode": "R/O", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

} | |||

}, | |||

"topologyMode": "Single-Primary" | |||

}, | |||

"groupInformationSourceMember": "db1:3306" | |||

} | |||

</pre> | |||

===Third DB Server=== | |||

Connect to the third db server using the mysql shell from the lxc host server | |||

<pre> | |||

MySQL|db1:3306 ssl|JS> cluster.addInstance('clustermgr@db3:3306'); | |||

MySQL| db1:3306 ssl -js> cluster.status() | |||

</pre> | |||

==Check cluster status== | |||

The cluster status will appear as below | |||

<pre> | |||

{ | |||

"clusterName": "myCluster", | |||

"defaultReplicaSet": { | |||

"name": "default", | |||

"primary": "db1:3306", | |||

"ssl": "REQUIRED", | |||

"status": "OK", | |||

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.", | |||

"topology": { | |||

"db1:3306": { | |||

"address": "db1:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

}, | |||

"db2:3306": { | |||

"address": "db2:3306", | |||

"mode": "R/O", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

}, | |||

"db3:3306": { | |||

"address": "db3:3306", | |||

"mode": "R/O", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

} | |||

}, | |||

"topologyMode": "Single-Primary" | |||

}, | |||

"groupInformationSourceMember": "db1:3306" | |||

} | |||

</pre> | |||

All three servers are added in the cluster. You can add the fourth one too in the same manner. At any given point in time, only one server will be primary and the remaining would be in read-only mode. In case of a failure, one of the two servers will become primary and will be in read/write mode and the other one will be in a read-only mode. | |||

=MySql Router & App Server= | |||

==Setting up MySQL router== | |||

*Install mysql router on the application server container (10.0.3.159) | |||

<pre> | |||

root@web1:/# apt install mysql-router | |||

root@web1:/# /bin/mysqlrouter --user=root --bootstrap clustermgr@db1:3306 -d myrouter | |||

</pre> | |||

After some time, mysqlrouter will have been installed and a folder created by the name of myrouter. In our case, we are running mysqlrouter with the user root, hence the folder will be created in "/" | |||

<pre> | |||

root@web:/# cd myrouter | |||

root@web1:/myrouter# ls -l | |||

</pre> | |||

Following content will be shown | |||

<pre> | |||

root@web1:/myrouter# ls -l | |||

total 28 | |||

drwx------ 2 root root 4096 Jun 8 17:48 data | |||

drwx------ 2 root root 4096 Jun 8 17:48 log | |||

-rw------- 1 root root 1211 Jun 8 17:48 mysqlrouter.conf | |||

-rw------- 1 root root 80 Jun 8 17:48 mysqlrouter.key | |||

drwx------ 2 root root 4096 Jun 8 17:48 run | |||

-rwx------ 1 root root 277 Jun 8 17:48 start.sh | |||

-rwx------ 1 root root 137 Jun 8 17:48 stop.sh | |||

</pre> | |||

Start the mysqlrouter with the following command | |||

<pre> | |||

root@web1:/myrouter# ./start.sh | |||

</pre> | |||

Mysql router has now been set and is ready to accept connections | |||

==Setting up App Server== | |||

*As a sample, we shall be setting up a wordpress site that will be served using an apache web server installed on this container in addition to the mysql-router. | |||

*There is a detailed howto on 'Setting up Wordpress' that can be found [[Deploying Wordpress | here]]. | |||

*'''When setting the database for wordpress, mention the database host as''' | |||

<pre> | |||

Database host: 10.0.3.159:6446 | |||

</pre> | |||

All other remaining settings remain the same for configuring the wordpress instance. All read & write requests will be made to the mysqlrouter and depending on the available database, mysqlrouter will route the requests to the database cluster. Any change in the database server (like a failure or rejoin) will automatically get updated in the mysqlrouter configuration. | |||

=Important Commands= | =Important Commands= | ||

==Check cluster status== | |||

Login through the mysql-shell to any of the servers in the cluster and run following comamnds. | |||

<pre> | |||

kedar@infrabase1:/$ mysqlsh | |||

MySQL -js> shell.connect('clustermgr@db1:3306') | |||

MySQL db1:3306 ssl |JS> var cluster = dba.getCluster() | |||

MySQL db1:3306 ssl |JS> cluster.status() | |||

</pre> | |||

==Shutting down the cluster== | |||

Shut down the read-only databases first. Assuming that db1 is the primary (read/write database) | |||

<pre> | |||

root@db3:/# systemctl stop mysql | |||

root@db2:/# systemctl stop mysql | |||

root@db1:/# systemctl stop mysql | |||

</pre> | |||

==Starting cluster after a shut down== | |||

If the cluster is started after a clean shutdown, ensure that mysql service is running on all the cluster servers. Login to any server you want to make primary using the mysql-shell. In this case, will connect to db1 using mysql-shell from the lxc host server (10.1.65.9) | |||

<pre> | |||

kedar@infrabase1:$ mysqlsh | |||

MySQL -js> shell.connect('clustermgr@db1:3306') | |||

MySQL db1:3306 ssl |JS> cluster = dba.rebootClusterFromCompleteOutage() | |||

MySQL db1:3306 ssl |JS> cluster.status() | |||

</PRE> | |||

==Switching between Single-Primary & Multi-primary== | |||

By default only one primary will be present in the cluster while all will be read-only. To make all other servers primary use the following command | |||

<pre> | |||

MySQL db1:3306 ssl |JS> cluster.switchToMultiPrimaryMode() | |||

MySQL db1:3306 ssl |JS> cluster.status() | |||

</pre> | |||

The status should be seen as below | |||

<pre> | |||

{ | |||

"clusterName": "myCluster", | |||

"defaultReplicaSet": { | |||

"name": "default", | |||

"ssl": "REQUIRED", | |||

"status": "OK", | |||

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.", | |||

"topology": { | |||

"db1:3306": { | |||

"address": "db1:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

}, | |||

"db2:3306": { | |||

"address": "db2:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

}, | |||

"db3:3306": { | |||

"address": "db3:3306", | |||

"mode": "R/W", | |||

"readReplicas": {}, | |||

"replicationLag": null, | |||

"role": "HA", | |||

"status": "ONLINE", | |||

"version": "8.0.20" | |||

} | |||

}, | |||

"topologyMode": "Multi-Primary" | |||

}, | |||

"groupInformationSourceMember": "db1:3306" | |||

} | |||

</pre> | |||

In this case if the application connects to port 6447, the connection will be load balanced, however if the application connects to port 6446, it will work only with the first primary server. | |||

To switch back to the single-primary mode, and make db1 as the primary database | |||

<pre> | |||

MySQL db1:3306 ssl |JS> cluster.switchToSinglePrimaryMode("db1:3306"); | |||

</pre> | |||

==Add a node to the cluster== | |||

This will add db4 to the existing cluster of three servers | |||

<pre> | |||

MySQL db1:3306 ssl |JS> cluster.addInstance("db4:3306") | |||

</pre> | |||

==Remove a node from the cluster== | |||

<pre> | |||

MySQL db1:3306 ssl |JS> cluster - dba.getCluster() | |||

MySQL db1:3306 ssl |JS> cluster.removeInstance("db4:3306") | |||

</pre> | |||

==Add slave server== | |||

In our case, we shall add db5 as slave of db2 server which is the cluster. It is assumed that db5 is a ubuntu 20.04 server with the same mysql server (8.0.20) installed on it. This server should not be part of the cluster when this activity is started. Add the configuration to the below file | |||

<pre> | |||

root@db5:/# nano /etc/mysql/mysql.conf.d/mysqld.cnf | |||

</pre> | |||

Add the following lines at the end of the file | |||

<pre> | |||

server-id = 25 # this should be unique | |||

gtid-mode = ON | |||

enforce-gtid-consistency = ON | |||

log-slave-updates = OFF | |||

read-only = ON | |||

super-read-only = ON | |||

expire-logs-days = 15 | |||

</pre> | |||

Save the file and | |||

<pre> | |||

root@db5:/# systemctl restart mysql | |||

</pre> | |||

On one of the cluster servers, create a replication slave user and take a full backup of all the databases and move it to the db5 server. We shall be using db2 for this purpose. ssh into db2 | |||

<pre> | |||

root@db2:/# mysql -u root -p | |||

mysql> CREATE USER 'repl'@'%' IDENTIFIED BY '123'; | |||

mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%'; | |||

mysql> EXIT; | |||

root@db2:/# mysqldump - u root -p --single-transaction --master-data=1 --all-databases --triggers --routines --events > backup.sql | |||

</pre> | |||

Move the file to the db5 server. On db5 server restore the backup file | |||

<pre> | |||

root@db5:/# mysql -uroot -p < backup.sql | |||

</pre> | |||

Initiate the replication and start the slave | |||

<pre> | |||

mysql> CHANGE MASTER TO MASTER_HOST = '10.0.3.246', MASTER_USER = 'repl', MASTER_PASSWORD = '123', MASTER_AUTO_POSITION = 1; | |||

mysql> START SLAVE; | |||

mysql> SHOW SLAVE STATUS\G; | |||

</pre> | |||

=References= | |||

==Website references== | |||

*https://dev.mysql.com/doc/refman/5.7/en/mysql-innodb-cluster-working-with-cluster.html | |||

*https://severalnines.com/database-blog/mysql-innodb-cluster-80-complete-operation-walk-through-part-two | |||

*https://dev.mysql.com/doc/mysql-router/8.0/en/mysql-router-deploying-bootstrapping.html | |||

*https://dev.mysql.com/doc/mysql-router/8.0/en/mysqlrouter.html#option_mysqlrouter_conf-use-sockets | |||

*https://santhinesh.wordpress.com/tag/mysql-router/ | |||

*https://dinfratechsource.com/2019/07/22/mysql-innodb-cluster-a-hands-on-tutorial/ | |||

*https://lefred.be/content/mysql-innodb-cluster-mysql-shell-starter-guide/ | |||

*https://dinfratechsource.com/2018/11/10/mysql-innodb-cluster-a-complete-high-availability-solution-for-mysql/ | |||

*https://mysqlserverteam.com/mysql-innodb-cluster-real-world-cluster-tutorial-for-ubuntu-and-debian/ | |||

==Video references== | |||

*Part 1: https://youtu.be/CbmNWAxgMf0 | |||

*Part 2: https://youtu.be/ZPJ1KiYN4bQ | |||

*Part 3: https://youtu.be/wT66wDpsJbE | |||

*Part 4: https://youtu.be/s1Gm_CTdRuI | |||

=Closing= | =Closing= | ||

MySQL router should be installed on all application servers so that required redundancy can be achieved. | |||

[[Category: Database Management]] | |||

Latest revision as of 07:48, 3 July 2020

| LXC Host Details |

hostname: infrabase1 Network: 10.1.65.0/24 IP Address : 10.1.65.9 Subnet Mask: 255.255.255.0 Gateway: 10.1.65.1 DNS: 8.8.8.8 Reverse Proxy setup: Nginx Server OS: Ubuntu 20.04 Edition: LTS, server sudo user: kedar FTP Client: Filezilla ssh client: terminal, reminna Text editors: sublime-text User PC Details PC type: Desktop OS: Ubuntu Desktop IP Address: 10.1.65.173 sudo user:kedar LXC Containers - db1 (MySQL8.0) (10.0.3.202) - db2 (MySQL8.0) (10.0.3.246) - db3 (MySQL8.0) (10.0.3.175) - db4 (MySQL8.0) (10.0.3.26) - web1 (Apps) (10.0.3.159) |

Introduction

This article explains how to create a MySQL8.0 HA cluster using group replication and MySQL router. It is important to note that I am using an Ubuntu 20.04 LTS server edition as the LXC host OS and have created 4 Ubuntu 20.04 containers in it and installed MySQL 8.0 from the repositories. The containers have private IPs and they are not reachable on public network. An nginx reverse proxy is setup on the LXC host server so that applications, setup on the containers, are accessible on port 443 or port 80. 'How to setup Nginx as a reverse Proxy' can be found here.

Environment preparation

Container details are mentioned below. Host entries have been made into respective servers so that the servers can reach every other server using a hostname instead of an IP.

| # | Container/Host | Hostname | IP Address | Software installed |

|---|---|---|---|---|

| 1 | LXC Host | infrabase1 | 10.1.65.9 | lxc, nginx, mysql-shell |

| 2 | Container 1 | db1 | 10.0.3.202 | mysql server 8.0 |

| 3 | Container 2 | db2 | 10.0.3.246 | mysql server 8.0 |

| 4 | Container 3 | db3 | 10.0.3.175 | mysql server 8.0 |

| 5 | Container 4 | db4 | 10.0.3.26 | mysql server 8.0 |

| 6 | Container 5 | web1 | 10.0.3.159 | mysql-client, apache2, php7.4, wordpress, mysqlrouter |

Wordpress will be setup on web1 container and mysqlrouter will also be placed on the same application server. Wordpress will connect to the mysqlrouter like a local mysql db instance. Mysqlrouter will route it to the necessary mysql server in the cluster.

The lxc host server (10.1.65.9) will be used to connect to the db servers using mysql-shell. Group replication implementation will be done using the mysql-shell only. No files will be manually edited. Installing mysql-shell will be explained in the below sections.

Preparing MySQL Servers

The below steps have to be done on all three servers without exception

Install mysql server 8.0

- Install from the default repositories

root@db1:/# apt install mysql-server nano

- Run secure installation

root@db1:/# mysql_secure_installation

If you are doing a POC, answer 'N' for the first question. It asks if password complexity should be enabled. You can keep short passwords so that it is simpler to try out in a POC. For a production environment you should enable password complexity. Answer 'Y' for all further questions. This will disable remote 'root login' on the server and also remove anonymous users and test databases from the MySql server.

Create user for cluster management

Login to sql server and create a user and grant all permissions. Since this is a POC, you can be a bit liberal with the permissions, otherwise checkout the documentation on permissions for group replication user on MySQL website.

root@db1:/# mysql -u root -p mysql> CREATE USER 'clustermgr'@'%' IDENTIFIED BY '123'; mysql> GRANT ALL PRIVILEGES ON *.* TO 'clustermgr'@'%'WITH GRANT OPTION; mysql> FLUSH PRIVILEGES; mysql> EXIT;

Allow remote database connection

root@db1:/# nano /etc/mysql/mysql.conf.d/mysqld.cnf

Comment the bind address directive so that this database can be connected from other sources.

. #bind-address = 127.0.0.1 .

Repeat the above steps in the other servers, exactly in the same manner. Do not change password of the user or the username. In POC keep it same otherwise they can be different.

Setting up MySQL Shell

We shall be setting up the mysql shell on the LXC host server (10.1.65.9) from where the lxc containers running the mysql servers are reachable. Group replication will be setup using the mysql-shell program. Group replication can also be setup without the mysql-shell, however several modifications are required in the configuration files. Mysql-shell automates the configuration and helps in managing the cluster as well.

It is important to note that Ubuntu 20.04 does not have a mysql-shell binary in the default apt repository. However, it is available as a snap. If you install mysql-shell snap, then javascript is not enabled by default for the mysql-shell installed through snap. For setting up mysql cluster mysql-shell needs javascript. We therefore download the mysql-shell deb file from mysql website and manually install it on LXC host server.

kedar@infrabase1:~$ wget https://dev.mysql.com/get/Downloads/MySQL-Shell/mysql-shell_8.0.20-1ubuntu20.04_amd64.deb kedar@infrabase1:~$ sudo dpkg -i mysql-shell_8.0.20-1ubuntu20.04_amd64.deb

Once it is installed, you should be able to connect to the db servers using 'clustermgr' credentials. Use the following command

kedar@infrabase1:~$ mysqlsh

It should look like below

Setting up the cluster

Prepare servers to add in cluster

- Log into the first database server(db1) through the mysql shell from the lxc host server (10.1.65.9).

kedar@infrabase1:~$ mysqlsh

At the shell enter the following

MySQL-js> dba.checkInstanceConfiguration('clustermgr@db1:3306')

MySQL-js> dba.configureLocalInstance('clustermgr@db1:3306')

By pressing ^D, you can exit the mysql-shell

Ssh into the mysql server and restart the db1 server. Check the status of the server and ensure mysql server is running.

root@db1:/# systemctl restart mysql root@db1:/# systemctl status mysql

Repeat these steps for all of the remaining servers

Create the cluster

First DB Server

Connect to the first db server using the mysql shell from the lxc host server

kedar@infrabase1:~$ mysqlsh

MySQL-js> shell.connect('clustermgr@db1:3306')

MySQL| db1:3306 ssl -js> cluster = dba.createCluster('myCluster');

MySQL| db1:3306 ssl -js> cluster.status()

It will appear as below

{

"clusterName": "myCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "db1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"db1:3306": {

"address": "db1:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db1:3306"

}

Second DB Server

Connect to the second db server using the mysql shell from the lxc host server

MySQL|db1:3306 ssl|JS> cluster.addInstance('clustermgr@db2:3306');

MySQL| db1:3306 ssl -js> cluster.status()

The cluster status will appear as below

{

"clusterName": "myCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "db1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"db1:3306": {

"address": "db1:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

},

"db2:3306": {

"address": "db2:3306",

"mode": "R/O",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db1:3306"

}

Third DB Server

Connect to the third db server using the mysql shell from the lxc host server

MySQL|db1:3306 ssl|JS> cluster.addInstance('clustermgr@db3:3306');

MySQL| db1:3306 ssl -js> cluster.status()

Check cluster status

The cluster status will appear as below

{

"clusterName": "myCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "db1:3306",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"db1:3306": {

"address": "db1:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

},

"db2:3306": {

"address": "db2:3306",

"mode": "R/O",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

},

"db3:3306": {

"address": "db3:3306",

"mode": "R/O",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db1:3306"

}

All three servers are added in the cluster. You can add the fourth one too in the same manner. At any given point in time, only one server will be primary and the remaining would be in read-only mode. In case of a failure, one of the two servers will become primary and will be in read/write mode and the other one will be in a read-only mode.

MySql Router & App Server

Setting up MySQL router

- Install mysql router on the application server container (10.0.3.159)

root@web1:/# apt install mysql-router root@web1:/# /bin/mysqlrouter --user=root --bootstrap clustermgr@db1:3306 -d myrouter

After some time, mysqlrouter will have been installed and a folder created by the name of myrouter. In our case, we are running mysqlrouter with the user root, hence the folder will be created in "/"

root@web:/# cd myrouter root@web1:/myrouter# ls -l

Following content will be shown

root@web1:/myrouter# ls -l total 28 drwx------ 2 root root 4096 Jun 8 17:48 data drwx------ 2 root root 4096 Jun 8 17:48 log -rw------- 1 root root 1211 Jun 8 17:48 mysqlrouter.conf -rw------- 1 root root 80 Jun 8 17:48 mysqlrouter.key drwx------ 2 root root 4096 Jun 8 17:48 run -rwx------ 1 root root 277 Jun 8 17:48 start.sh -rwx------ 1 root root 137 Jun 8 17:48 stop.sh

Start the mysqlrouter with the following command

root@web1:/myrouter# ./start.sh

Mysql router has now been set and is ready to accept connections

Setting up App Server

- As a sample, we shall be setting up a wordpress site that will be served using an apache web server installed on this container in addition to the mysql-router.

- There is a detailed howto on 'Setting up Wordpress' that can be found here.

- When setting the database for wordpress, mention the database host as

Database host: 10.0.3.159:6446

All other remaining settings remain the same for configuring the wordpress instance. All read & write requests will be made to the mysqlrouter and depending on the available database, mysqlrouter will route the requests to the database cluster. Any change in the database server (like a failure or rejoin) will automatically get updated in the mysqlrouter configuration.

Important Commands

Check cluster status

Login through the mysql-shell to any of the servers in the cluster and run following comamnds.

kedar@infrabase1:/$ mysqlsh

MySQL -js> shell.connect('clustermgr@db1:3306')

MySQL db1:3306 ssl |JS> var cluster = dba.getCluster()

MySQL db1:3306 ssl |JS> cluster.status()

Shutting down the cluster

Shut down the read-only databases first. Assuming that db1 is the primary (read/write database)

root@db3:/# systemctl stop mysql root@db2:/# systemctl stop mysql root@db1:/# systemctl stop mysql

Starting cluster after a shut down

If the cluster is started after a clean shutdown, ensure that mysql service is running on all the cluster servers. Login to any server you want to make primary using the mysql-shell. In this case, will connect to db1 using mysql-shell from the lxc host server (10.1.65.9)

kedar@infrabase1:$ mysqlsh

MySQL -js> shell.connect('clustermgr@db1:3306')

MySQL db1:3306 ssl |JS> cluster = dba.rebootClusterFromCompleteOutage()

MySQL db1:3306 ssl |JS> cluster.status()

Switching between Single-Primary & Multi-primary

By default only one primary will be present in the cluster while all will be read-only. To make all other servers primary use the following command

MySQL db1:3306 ssl |JS> cluster.switchToMultiPrimaryMode() MySQL db1:3306 ssl |JS> cluster.status()

The status should be seen as below

{

"clusterName": "myCluster",

"defaultReplicaSet": {

"name": "default",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"db1:3306": {

"address": "db1:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

},

"db2:3306": {

"address": "db2:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

},

"db3:3306": {

"address": "db3:3306",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.20"

}

},

"topologyMode": "Multi-Primary"

},

"groupInformationSourceMember": "db1:3306"

}

In this case if the application connects to port 6447, the connection will be load balanced, however if the application connects to port 6446, it will work only with the first primary server.

To switch back to the single-primary mode, and make db1 as the primary database

MySQL db1:3306 ssl |JS> cluster.switchToSinglePrimaryMode("db1:3306");

Add a node to the cluster

This will add db4 to the existing cluster of three servers

MySQL db1:3306 ssl |JS> cluster.addInstance("db4:3306")

Remove a node from the cluster

MySQL db1:3306 ssl |JS> cluster - dba.getCluster()

MySQL db1:3306 ssl |JS> cluster.removeInstance("db4:3306")

Add slave server

In our case, we shall add db5 as slave of db2 server which is the cluster. It is assumed that db5 is a ubuntu 20.04 server with the same mysql server (8.0.20) installed on it. This server should not be part of the cluster when this activity is started. Add the configuration to the below file

root@db5:/# nano /etc/mysql/mysql.conf.d/mysqld.cnf

Add the following lines at the end of the file

server-id = 25 # this should be unique gtid-mode = ON enforce-gtid-consistency = ON log-slave-updates = OFF read-only = ON super-read-only = ON expire-logs-days = 15

Save the file and

root@db5:/# systemctl restart mysql

On one of the cluster servers, create a replication slave user and take a full backup of all the databases and move it to the db5 server. We shall be using db2 for this purpose. ssh into db2

root@db2:/# mysql -u root -p mysql> CREATE USER 'repl'@'%' IDENTIFIED BY '123'; mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%'; mysql> EXIT; root@db2:/# mysqldump - u root -p --single-transaction --master-data=1 --all-databases --triggers --routines --events > backup.sql

Move the file to the db5 server. On db5 server restore the backup file

root@db5:/# mysql -uroot -p < backup.sql

Initiate the replication and start the slave

mysql> CHANGE MASTER TO MASTER_HOST = '10.0.3.246', MASTER_USER = 'repl', MASTER_PASSWORD = '123', MASTER_AUTO_POSITION = 1; mysql> START SLAVE; mysql> SHOW SLAVE STATUS\G;

References

Website references

- https://dev.mysql.com/doc/refman/5.7/en/mysql-innodb-cluster-working-with-cluster.html

- https://severalnines.com/database-blog/mysql-innodb-cluster-80-complete-operation-walk-through-part-two

- https://dev.mysql.com/doc/mysql-router/8.0/en/mysql-router-deploying-bootstrapping.html

- https://dev.mysql.com/doc/mysql-router/8.0/en/mysqlrouter.html#option_mysqlrouter_conf-use-sockets

- https://santhinesh.wordpress.com/tag/mysql-router/

- https://dinfratechsource.com/2019/07/22/mysql-innodb-cluster-a-hands-on-tutorial/

- https://lefred.be/content/mysql-innodb-cluster-mysql-shell-starter-guide/

- https://dinfratechsource.com/2018/11/10/mysql-innodb-cluster-a-complete-high-availability-solution-for-mysql/

- https://mysqlserverteam.com/mysql-innodb-cluster-real-world-cluster-tutorial-for-ubuntu-and-debian/

Video references

- Part 1: https://youtu.be/CbmNWAxgMf0

- Part 2: https://youtu.be/ZPJ1KiYN4bQ

- Part 3: https://youtu.be/wT66wDpsJbE

- Part 4: https://youtu.be/s1Gm_CTdRuI

Closing

MySQL router should be installed on all application servers so that required redundancy can be achieved.